I discovered NCover over the weekend and wow, am I a fan!

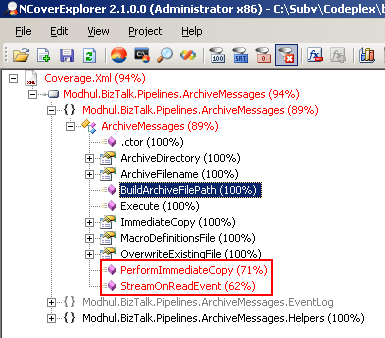

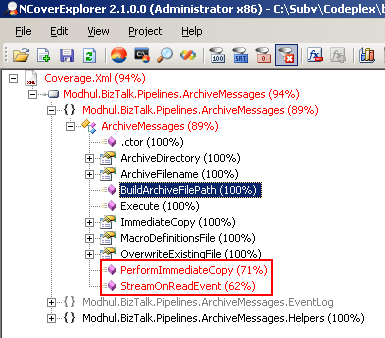

NCover is a code coverage tool which, to quote the website, “helps you test intelligently by revealing which tests haven’t been written yet.” In layman’s terms, NCover graphically shows you which lines of code were not touched during the execution of your unit tests, allowing you to write tests that exercise those lines and work towards 100% code coverage, as shown below:

Pipeline Component Testing

Armed with Tomas Restrepo’s excellent Pipeline Testing library, we can now comprehensively test our Pipeline Components:

1. Develop unit tests to test your pipeline and ensure that the tests execute and are successful;

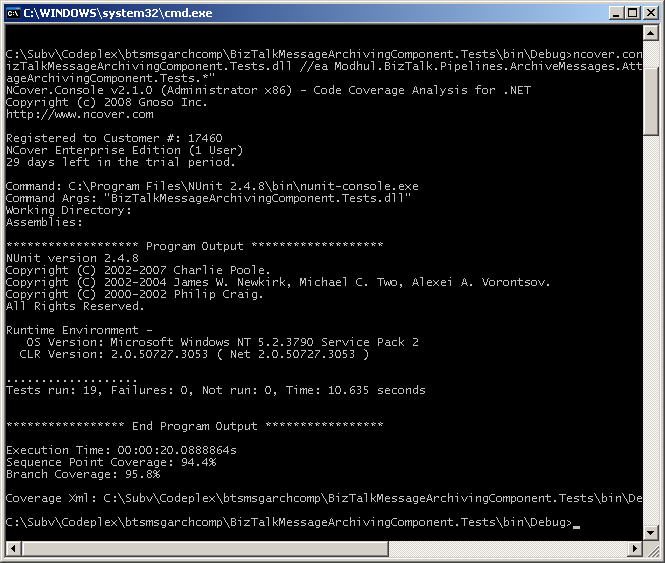

2. Invoke your testing framework from the command line to check that your tests will run correctly in the NCover environment (I’m using NUnit here, but you could also invoke VSTS):

"C:\Program Files\NUnit 2.4.8\bin\nunit-console.exe" BizTalkMessageArchivingComponent.Tests.dll

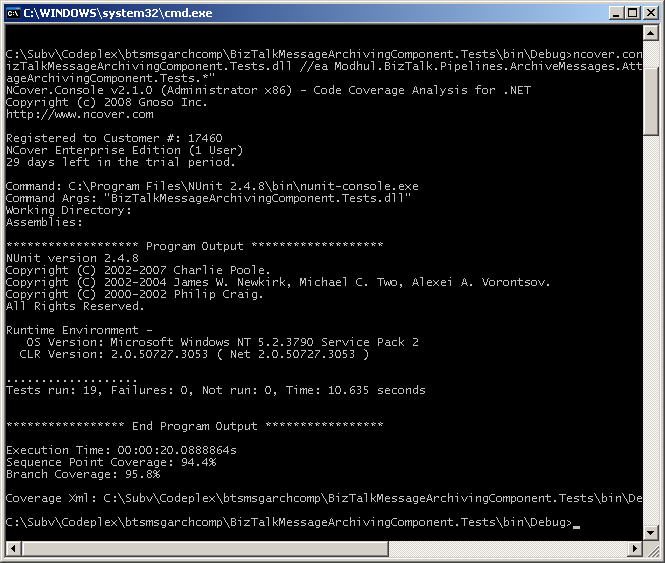

Which should produce the following output on the command line:

3. With our unit tests successfully executing, we can wrap the NUnit invocation in NCover loveliness which will inspect our code coverage while those tests executed:

ncover.console "C:\Program Files\NUnit 2.4.8\bin\nunit-console.exe" BizTalkMessageArchivingComponent.Tests.dll //ea Modhul.BizTalk.Pipelines.ArchiveMessages.Attributes.NCoverExcludeCoverage //et "Winterdom.*;BizTalkMessageArchivingComponent.Tests.*"

Producing command-line output similar to the following (notice the NUnit tests running between the ***Program Output*** and ***End Program Output*** text):

NCover has now determined the code coverage based on your unit tests and produced a Coverage.Xml file. This file contains the coverage information and can be loaded into the NCover.Explorer tool, which provides a Visual Studio-like environment showing which lines of code were touched and untouched by your unit tests.
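If you run the step 3 command often, it can be handy to script it. The following is only a rough sketch in Python, not part of NCover or NUnit: the function names `build_ncover_command` and `run_coverage` are my own, the paths assume the backslashes shown in the commands above, and the only switches used are the `//ea` and `//et` ones from step 3.

```python
import subprocess
from typing import List, Sequence

# Paths and names are taken from the commands in this post; adjust them
# to match the NUnit install and test assembly on your own machine.
NUNIT_CONSOLE = r"C:\Program Files\NUnit 2.4.8\bin\nunit-console.exe"
TEST_ASSEMBLY = "BizTalkMessageArchivingComponent.Tests.dll"


def build_ncover_command(
    exclude_attribute: str,
    exclude_types: Sequence[str],
    ncover: str = "ncover.console",
) -> List[str]:
    """Build the argument list for the NCover run shown in step 3."""
    return [
        ncover,
        NUNIT_CONSOLE,
        TEST_ASSEMBLY,
        "//ea", exclude_attribute,        # attribute marking code to exclude
        "//et", ";".join(exclude_types),  # semicolon-separated type patterns
    ]


def run_coverage(command: List[str]) -> int:
    """Run the command and return its exit code (needs NCover and NUnit installed)."""
    return subprocess.run(command).returncode


if __name__ == "__main__":
    cmd = build_ncover_command(
        "Modhul.BizTalk.Pipelines.ArchiveMessages.Attributes.NCoverExcludeCoverage",
        ["Winterdom.*", "BizTalkMessageArchivingComponent.Tests.*"],
    )
    # Print the command for inspection; call run_coverage(cmd) to execute it.
    print(subprocess.list2cmdline(cmd))
```

Passing the arguments as a list avoids having to hand-quote the path containing spaces; the script only prints the command by default so you can check it before running anything.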

4. Load the NCover.Explorer tool and open the Coverage.Xml file generated above. You will be presented with a screen detailing your code coverage. In the example below, you can see that the PerformImmediateCopy and StreamOnReadEvent methods do not have full code coverage – both have code which was not executed in our unit tests:

Clicking on one of the methods in the tree view loads the offending method, displaying the lines which were not executed by our tests in red; lines that were executed are displayed in blue:

Based on this information, we can now create tests to cater for these exceptions, ensuring we have 100% code coverage.
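If you would rather get a quick list of under-covered methods without opening NCover.Explorer, the coverage file can in principle be summarised with a few lines of script. Treat the following as a sketch under a big assumption: the sample XML is a hypothetical, simplified layout (methods containing sequence points with a visit count), and the real Coverage.Xml schema depends on your NCover version, so the element and attribute names will probably need adjusting. The class name `ArchiveMessages` and the `Execute` method are made up for illustration; only the two method names mentioned above come from my component.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List, Tuple

# ASSUMPTION: hypothetical, simplified coverage layout for illustration only.
# Check your own Coverage.Xml and adapt the element/attribute names.
SAMPLE_COVERAGE_XML = """\
<coverage>
  <module name="BizTalkMessageArchivingComponent.dll">
    <method name="PerformImmediateCopy" class="ArchiveMessages">
      <seqpnt visitcount="1" line="10" />
      <seqpnt visitcount="0" line="11" />
    </method>
    <method name="StreamOnReadEvent" class="ArchiveMessages">
      <seqpnt visitcount="2" line="20" />
      <seqpnt visitcount="0" line="21" />
      <seqpnt visitcount="0" line="22" />
    </method>
    <method name="Execute" class="ArchiveMessages">
      <seqpnt visitcount="3" line="30" />
    </method>
  </module>
</coverage>
"""


def summarise(xml_text: str) -> Dict[str, Tuple[int, int]]:
    """Map 'Class.Method' to (visited sequence points, total sequence points)."""
    root = ET.fromstring(xml_text)
    summary: Dict[str, Tuple[int, int]] = {}
    for method in root.iter("method"):
        points = method.findall("seqpnt")
        visited = sum(1 for p in points if int(p.get("visitcount", "0")) > 0)
        key = f"{method.get('class')}.{method.get('name')}"
        summary[key] = (visited, len(points))
    return summary


def uncovered_methods(summary: Dict[str, Tuple[int, int]]) -> List[str]:
    """Return the methods where at least one sequence point was never visited."""
    return [name for name, (visited, total) in summary.items() if visited < total]


if __name__ == "__main__":
    for name in uncovered_methods(summarise(SAMPLE_COVERAGE_XML)):
        print(name)
```

A list like this is a useful to-do list for new tests, but NCover.Explorer remains the better view for seeing exactly which lines were missed.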

NCover is an excellent tool and, although it isn’t free, I personally think it’s a must-have for any developer’s toolkit.

