In this post I’ll show you how to correctly configure diagnostics in an Azure Worker Role to push custom log files (NLog, Log4Net etc.) to Azure Storage using the in-built Azure Diagnostics Agent.

Configuring our Custom Logger – NLog

I’m not a massive fan of the recommended Azure Worker Role logging process, namely the Trace.WriteLine() method. I don’t feel it provides sufficient flexibility for my logging needs, and I think it looks crap when my code is liberally scattered with Trace.WriteLine() statements; code is art and all that.

NLog, on the other hand, provides all the flexibility I need, including log file layout formatting, log file archiving (with granularity down to one minute) and archive file cleanup, to name just a few. Having used it as the main logging tool on several projects, I feel completely at home with this particular library and want to use it within my Worker Role implementations.

I won’t go into how to add NLog (or any other logging framework you may use) to your project, as there are loads of examples on the interweb. I will, however, share my Azure Worker Role app.config file, which shows the configuration I have for my logging:


    <?xml version="1.0" encoding="utf-8"?>
    <configuration>
      <configSections>
        <section name="nlog" type="NLog.Config.ConfigSectionHandler, NLog" />
      </configSections>
      <nlog xmlns="http://www.nlog-project.org/schemas/NLog.xsd" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">
        <targets>
          <target name="MessageWorker" xsi:type="File" fileName="logs\MessageWorker_Current.log" layout="${longdate} ${level:uppercase=true:padding=5} ${processid} ${message}" archiveFileName="logs\MessageWorker_{#}.txt" archiveEvery="Day" archiveNumbering="Date" archiveDateFormat="yyyy-MM-dd_HH-mm-ss" maxArchiveFiles="14" concurrentWrites="false" keepFileOpen="false" encoding="iso-8859-2" />
        </targets>
        <rules>
          <logger name="*" minlevel="Trace" writeTo="MessageWorker" />
        </rules>
      </nlog>
    </configuration>

On line 8 we define our MessageWorker target which instructs NLog to:

- Write our (current) log file to ‘MessageWorker_Current.log’ (the fileName property) with a particular log-file layout (the layout property);

- Create archives every day (the archiveEvery property) with an archive filename of ‘MessageWorker_{#}.txt’ (the archiveFileName property), where the ‘{#}’ is expanded to a date/time when an archive file is created (the archiveNumbering and archiveDateFormat properties);

- Maintain a rolling 14 day window of archive files, deleting anything older (the maxArchiveFiles property);

- Not perform concurrent writes (the concurrentWrites property) or keep files open (the keepFileOpen property), which I discovered helps the Azure Diagnostics Agent consistently copy log files to Blob Storage. Your mileage may vary with these settings.
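To make the ‘{#}’ expansion a little more concrete, here is a minimal sketch of the file name you end up with. It only mimics the visible result: NLog does the real work internally and decides which timestamp to use, and the ArchiveNameDemo class and its Expand() method are hypothetical names of mine, not part of NLog.

```csharp
using System;
using System.Globalization;

// Hypothetical illustration only: NLog performs this expansion itself.
public static class ArchiveNameDemo
{
    // Replaces the "{#}" placeholder with the timestamp formatted using
    // the same pattern as the archiveDateFormat attribute.
    public static string Expand(string archiveFileName, string archiveDateFormat, DateTime timestamp)
    {
        string stamp = timestamp.ToString(archiveDateFormat, CultureInfo.InvariantCulture);
        return archiveFileName.Replace("{#}", stamp);
    }
}
```

Calling Expand(@"logs\MessageWorker_{#}.txt", "yyyy-MM-dd_HH-mm-ss", new DateTime(2015, 3, 14)) returns logs\MessageWorker_2015-03-14_00-00-00.txt, which is the shape of archive name the configuration above should produce.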

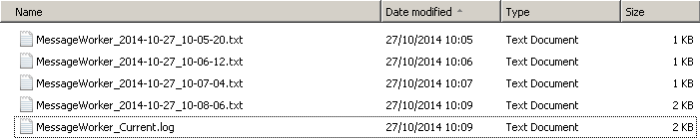

These configuration settings result in a number of files in our log directory – in the screenshot below I am archiving every minute:

Define a Local Storage Resource

It is recommended that custom log files are written to Local Storage Resources on the Azure Worker Role VM. Local Storage Resources are reserved directories in the file system of the VM in which the Worker Role instance is running. Further information about Local Storage can be found online at MSDN.

In order to define Local Storage, we add a LocalResources section to the ServiceDefinition.csdef file and define our ‘CustomLogs’ Local Storage, as shown on line 16 below. The cleanOnRoleRecycle attribute, set to false here, tells Azure not to delete the Local Storage if the role is recycled (restarted or scaled up/down), and the sizeInMB attribute tells Azure how much Local Storage to allocate on the VM.


    <?xml version="1.0" encoding="utf-8"?>
    <ServiceDefinition name="MessageWorker" xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition" schemaVersion="2014-06.2.4">
      <WorkerRole name="MessageWorker.WorkerRole" vmsize="Small">
        <Imports>
          <Import moduleName="Diagnostics" />
          <Import moduleName="RemoteAccess" />
          <Import moduleName="RemoteForwarder" />
        </Imports>
        <ConfigurationSettings>
          <Setting name="ServiceBusConnectionString" />
          <Setting name="StorageConnectionString" />
          <Setting name="MinimumLogLevel" />
        </ConfigurationSettings>
        <LocalResources>
          <LocalStorage name="DiagnosticStore" cleanOnRoleRecycle="false" sizeInMB="8192" />
          <LocalStorage name="CustomLogs" cleanOnRoleRecycle="false" sizeInMB="1024" />
        </LocalResources>
      </WorkerRole>
    </ServiceDefinition>

On an Azure Worker Role VM, the Local Resources directory can be found at: C:\Resources\Directory\[GUID].[WorkerRoleName]

On a local development machine, the Local Resources directory can be found at: C:\Users\[USER]\AppData\Local\dftmp\Resources\[GUID]\directory\CustomLogs

Configure NLog to Write Log Files to the Local Storage Resource

When using Local Storage Resources, we don’t know the actual directory name for the resource (and therefore our log files) until runtime. As a result, we need to update our NLog Target’s fileName and archiveFileName properties as the Worker Role starts. To do this, we need a small helper to re-configure the targets:


    using System.IO;
    using NLog;
    using NLog.Targets;

    public static class LogTargetManager
    {
        private static readonly Logger logger = LogManager.GetCurrentClassLogger();

        public static void SetLogTargetBaseDirectory(string targetName, string baseDirectory)
        {
            logger.Debug("SetLogTargetBaseDirectory() – Log Target Name: {0}.", targetName);
            logger.Debug("SetLogTargetBaseDirectory() – (New) Base Directory: {0}.", baseDirectory);

            // 'as' yields null (rather than throwing) if the target is missing or is not a FileTarget.
            var logTarget = LogManager.Configuration.FindTargetByName(targetName) as FileTarget;
            if (logTarget != null)
            {
                // Capture the Target's current Filename and Archive Filename.
                // The layout's ToString() wraps the text in single quotes, so trim them from both ends first.
                var currentTargetFilename = Path.GetFileName(logTarget.FileName.ToString().Trim('\''));
                var currentTargetArchiveFilename = Path.GetFileName(logTarget.ArchiveFileName.ToString().Trim('\''));

                // Re-base the Target's Filename and Archive Filename Directory to that supplied in 'baseDirectory'.
                logTarget.FileName = Path.Combine(baseDirectory, currentTargetFilename);
                logTarget.ArchiveFileName = Path.Combine(baseDirectory, currentTargetArchiveFilename);

                logger.Debug("Logger Target '{0}' Filename re-based to: {1}", targetName, logTarget.FileName);
                logger.Debug("Logger Target '{0}' Archive Filename re-based to: {1}", targetName, logTarget.ArchiveFileName);
            }

            // Re-configure the existing loggers with the new logging target details
            LogManager.ReconfigExistingLoggers();
        }
    }

This helper is passed the NLog Target to be updated and the directory path of the Local Storage Resource that is made available at runtime:

- First, we retrieve the FileTarget from our NLog configuration based on the supplied Target name (the FindTargetByName() call).

- Next, we capture the current log filename and the archive filename, stripping the apostrophe left over from the layout's ToString(), which wraps the layout text in single quotes.

- We then combine the current log filename and archive filename with the supplied base directory, which contains the Local Storage directory passed to this method (the two Path.Combine() calls).

- Finally, we call LogManager.ReconfigExistingLoggers() to re-configure the existing loggers, activating these changes.
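The string handling in the middle two steps is easy to get subtly wrong, so here is a small stand-alone sketch of just that part, with no NLog dependency. LogPathRebaser and Rebase() are hypothetical names of mine, and the input is assumed to be the quoted text you get from calling ToString() on the target's FileName or ArchiveFileName.

```csharp
using System;
using System.IO;

// Hypothetical helper (not part of NLog): re-bases a quoted layout string
// such as 'logs\MessageWorker_Current.log' onto a new directory.
public static class LogPathRebaser
{
    public static string Rebase(string layoutText, string baseDirectory)
    {
        // Strip the single quotes that surround the layout text.
        string unquoted = layoutText.Trim('\'');

        // Keep only the file-name part. Both separators are checked so the
        // result does not depend on the platform's idea of a path separator.
        int lastSeparator = unquoted.LastIndexOfAny(new[] { '\\', '/' });
        string fileName = unquoted.Substring(lastSeparator + 1);

        // Place the file name under the new base directory.
        return Path.Combine(baseDirectory, fileName);
    }
}
```

For example, Rebase(@"'logs\MessageWorker_{#}.txt'", localStorageRoot) gives the same result as Path.Combine(localStorageRoot, "MessageWorker_{#}.txt"), so the ‘{#}’ placeholder survives the re-basing and NLog can still expand it at archive time.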

This helper is called within the Worker Role’s OnStart() method:


    using System.Diagnostics;
    using System.Net;
    using System.Reflection;
    using Microsoft.WindowsAzure.ServiceRuntime;

    public class MessageWorker : RoleEntryPoint
    {
        /* This GIST only demonstrates the OnStart() method. */

        public override bool OnStart()
        {
            Trace.WriteLine("Starting MessageWorker Worker Role Instance…");

            // Initialize Worker Role Configuration
            InitializeConfigurationSettings();

            // Re-configure Logging (update logging to runtime configuration settings)
            LogTargetManager.SetLogTargetBaseDirectory("MessageWorker", RoleEnvironment.GetLocalResource("CustomLogs").RootPath);
            LogLevelManager.SetMinimimLogLevel(_workerRoleConfiguration.MinimumLogLevel);

            // Formally start the Worker Role Instance
            logger.Info("Starting Worker Role Instance (v{0})…", Assembly.GetExecutingAssembly().GetName().Version.ToString());

            // Log Configuration Settings
            LogConfigurationSettings();

            // Set the maximum number of concurrent connections
            ServicePointManager.DefaultConnectionLimit = 12;

            return base.OnStart();
        }
    }

In OnStart(), we call the SetLogTargetBaseDirectory() method, passing the name of the NLog Target from our app.config file and the directory of the Local Storage Resource, obtained by calling:

RoleEnvironment.GetLocalResource("CustomLogs").RootPath

where “CustomLogs” is the name of the Local Storage defined in the ServiceDefinition.csdef file (see the earlier Define a Local Storage Resource section). RoleEnvironment is a class located in the Microsoft.WindowsAzure.ServiceRuntime namespace.

Configuring Azure Diagnostics

Our final step in the process is to configure diagnostics, which tells the Azure Diagnostics Agent what diagnostics data should be captured and where it should be stored. This can be done either declaratively (via the diagnostics.wadcfg file, which is the approach used here) or through code in the OnStart() method of the Worker Role. Be aware that there is an order of precedence for configuring diagnostic data; take a look at Diagnostics Configuration Mechanisms and Order of Precedence for more information.


    <?xml version="1.0" encoding="utf-8"?>
    <DiagnosticMonitorConfiguration configurationChangePollInterval="PT5M" overallQuotaInMB="4096" xmlns="http://schemas.microsoft.com/ServiceHosting/2010/10/DiagnosticsConfiguration">
      <DiagnosticInfrastructureLogs scheduledTransferPeriod="PT5M" />
      <Directories scheduledTransferPeriod="PT1M">
        <CrashDumps container="wad-crash-dumps" />
        <DataSources>
          <DirectoryConfiguration container="wad-nlog2" directoryQuotaInMB="512">
            <LocalResource relativePath="." name="CustomLogs" />
          </DirectoryConfiguration>
        </DataSources>
      </Directories>
      <Logs bufferQuotaInMB="1024" scheduledTransferPeriod="PT15M" scheduledTransferLogLevelFilter="Error" />
      <PerformanceCounters bufferQuotaInMB="512" scheduledTransferPeriod="PT15M">
        <PerformanceCounterConfiguration counterSpecifier="\Memory\Available MBytes" sampleRate="PT3M" />
        <PerformanceCounterConfiguration counterSpecifier="\Processor(_Total)\% Processor Time" sampleRate="PT1S" />
        <PerformanceCounterConfiguration counterSpecifier="\Memory\Committed Bytes" sampleRate="PT1S" />
        <PerformanceCounterConfiguration counterSpecifier="\LogicalDisk(_Total)\Disk Read Bytes/sec" sampleRate="PT1S" />
        <PerformanceCounterConfiguration counterSpecifier="\Process(WaWorkerHost)\% Processor Time" sampleRate="PT1S" />
        <PerformanceCounterConfiguration counterSpecifier="\Process(WaWorkerHost)\Private Bytes" sampleRate="PT1S" />
        <PerformanceCounterConfiguration counterSpecifier="\Process(WaWorkerHost)\Thread Count" sampleRate="PT1S" />
      </PerformanceCounters>
      <WindowsEventLog bufferQuotaInMB="1024" scheduledTransferPeriod="PT30M" scheduledTransferLogLevelFilter="Error">
        <DataSource name="Application!*" />
      </WindowsEventLog>
    </DiagnosticMonitorConfiguration>

The important lines for our custom logging solution are found in the <DataSources> element between lines 6 & 10:

- Line 7 – <DirectoryConfiguration> details the Azure Storage Container where the custom log files should be written; and

- Line 8 – <LocalResource> details the Local Storage Resource where the log files reside.
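For comparison, the same directory data source could probably be set up through code instead. The sketch below assumes the DiagnosticMonitor API from the Microsoft.WindowsAzure.Diagnostics assembly as it shipped with the 2.4-era SDK (I believe later SDK versions moved away from it), and DiagnosticsBootstrapper is a hypothetical class name. It is not what this post uses, and the order of precedence mentioned earlier still decides which configuration actually wins.

```csharp
using System;
using Microsoft.WindowsAzure.Diagnostics;
using Microsoft.WindowsAzure.ServiceRuntime;

// Hypothetical helper: a code-based counterpart to the <DataSources> element.
public static class DiagnosticsBootstrapper
{
    public static void ConfigureCustomLogTransfer()
    {
        // Start from the SDK's default configuration.
        DiagnosticMonitorConfiguration config = DiagnosticMonitor.GetDefaultInitialConfiguration();

        // Counterpart of <Directories scheduledTransferPeriod="PT1M">.
        config.Directories.ScheduledTransferPeriod = TimeSpan.FromMinutes(1);

        // Counterpart of <DirectoryConfiguration> and <LocalResource>.
        config.Directories.DataSources.Add(new DirectoryConfiguration
        {
            Path = RoleEnvironment.GetLocalResource("CustomLogs").RootPath,
            Container = "wad-nlog2",
            DirectoryQuotaInMB = 512
        });

        // The argument is the name of the connection string setting, not the connection string itself.
        DiagnosticMonitor.Start("Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString", config);
    }
}
```

If you went this route, the method would be called from OnStart() before base.OnStart(). I stuck with the diagnostics.wadcfg file because it keeps the diagnostics settings out of the role's code.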

Bringing it all Together

Let’s recap on the various moving parts we needed to get this up and running:

- Configure the app.config file for your Worker Role, adding the required NLog configuration. Check that this is working locally before trying to push the settings to the cloud.

- Define a Local Storage Resource in the ServiceDefinition.csdef file for your Cloud Service Project where log files will be written to. In this blog post we have called our resource ‘CustomLogs’.

- Add functionality to update the NLog target’s filename and archive filename at runtime by querying the Local Resource’s Root Path in the Worker Role’s OnStart() method.

- Add a DataSources section to the diagnostics.wadcfg file instructing the Azure Diagnostics Agent to push custom log files from the Local Storage Resource to the specified Azure Blob Container.

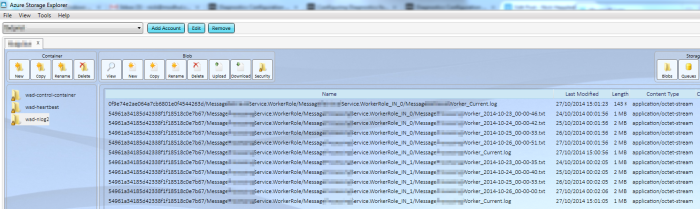

With all of the required pieces in place we can deploy to our Cloud Service on Azure as usual, either through Visual Studio or the Management Portal. Give the service time to spin-up and we should hopefully start to see our log files appear in the specified container within Azure Storage – in the screenshot below, log files are being archived once a day and copied to the Storage Container at approx. 2 mins past midnight every morning:

Gotchas

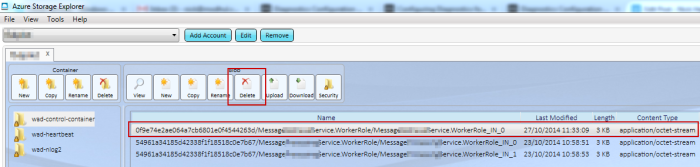

In closing, there is one gotcha that I would like to highlight. When a Cloud Service Project is deployed to a Cloud Service in Azure, the diagnostics configuration (derived from the diagnostics.wadcfg file) is written to a control configuration blob in the wad-control-container container, as specified in the Diagnostics Connection String (setting Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString) from the deployed ServiceConfiguration.Cloud.cscfg configuration file.

The purpose of this file is detailed as follows in the MSDN article Diagnostics Configuration Mechanisms and Order of Precedence:

A control configuration blob will be created for each role instance whenever a role without a blob starts. The wad-control-container blob has the highest precedence for controlling behavior and any changes to the blob will take effect the next time the instance polls for changes. The default polling interval is once per minute.

In order for any changes that you make to the diagnostics.wadcfg file to take effect, you will need to delete this control configuration blob and have Azure re-create it when the role re-starts. Otherwise, Azure will continue to use the outdated diagnostics configuration from this control configuration blob and log-files won’t be copied to Azure Storage.