Originally posted by Nick Heppleston at: http://www.modhul.com/2009/06/29/configuring-biztalk-for-disaster-recovery-part-1/

I’m often surprised by the lack of disaster recovery capability organisations have for BizTalk, the novel (read: ‘unsupported’) approaches taken to provide a theoretical level of cover that is then never tested, or the lengthy procedures (24 hours +) for a task that should be as simple as pressing ‘the big read panic button’ when things go horribly wrong.

In this three-part series on the BizTalk Backup and Restore process, I’m going to look at one of the two supported methods to backup and restore a BizTalk Server environment – Log Shipping. The series will cover the following topics:

- In Part 1, I’ll provide a high-level overview of the backup and restore process.

- In Part 2, I’ll walk through an actual backup and restore setup, looking at the configuration steps as we go.

- In Part 3, I’ll demonstrate a long-running BizTalk application that is restored on a sample disaster recovery environment using the backup and restore process configured in Part 2.

BizTalk Backup and Restore – How Distributed Transactions Complicate Things

Before we look at how the BizTalk backup and restore process works, lets take a step back and look at why BizTalk’s use of distributed transactions mean we must use the supplied backup and restore jobs.

Distributed Transactions and Transactional-Log Marking

BizTalk uses distributed transactions to maintain consistency of its actions across several databases. To ensure that these distributed transactions are consistent across all databases after a restore, the BizTalk Backup Job uses Transactional-Log Marking to mark the transaction-logs of all BizTalk databases participating in the backup.

The marking transaction occurs when the BizTalk Backup Job runs: the job blocks new distributed transactions from starting, waits for currently running distributed transactions to complete, and then executes to place the marking transaction; once complete, distributed-transactions waiting for the marking process to finish can continue processing.

If we didn’t use transactional-log marking, the following scenario may occur: we have two databases which are being written to as part of a distributed transaction: if a transaction-log backup is taken (without using transaction log marking) from database-one before the transaction-log backup is taken on database-two, when we restore the two databases the distributed transaction is in an inconsistent state – committed on database-one, but there is no evidence of it on database-two.

The important point to consider here is that because of the distributed transactions, the databases need to be restored in the same transactionally consistent state. If we did not use the BizTalk Backup Jobs and the transaction-log marking feature, we cannot acheive this consistency.

Why is SQL Server Mirroring not Supported?

So why can’t we just use SQL Server Mirroring to transfer the transaction-logs from the live to disaster-recovery environments? Unfortunately, SQL Server Mirroring does not support distributed transactions: after a failover event, the new principal server contacts the MSDTC service, however the service has no knowledge of the new principal server. As a result, the MSDTC service unexpectedly terminates any transactions that are “preparing to commit”, while other transactions may already have comitted.

If you’re tempted to use mirroring to backup your BizTalk databases, don’t! Mirroring is not supported by Microsoft as a backup strategy. As old-fashioned as it is, Log Shipping is fully supported in all versions and all editions of SQL Server, the log shipping jobs are relatively easy to configure and provides all of the distributed transaction/transactional-log marking functionality required to ensure a consistent restore in a disaster recovery situation.

Further information on mirroring and distributed transactions can be found on MSDN: Database Mirroring and Cross-Database Transactions.

The Backup and Restore Process

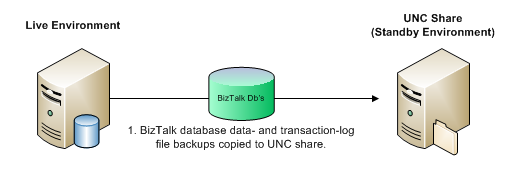

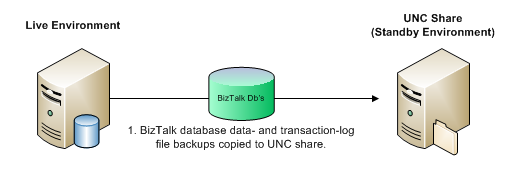

The backup and restore process – otherwise known as ‘Log Shipping’ in MSDN documentation – is relatively easy; we’ll cover the various steps in more detail below, however to give you an overview, the process is as follows:

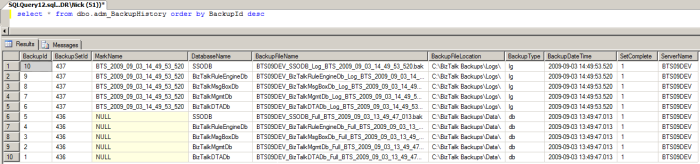

- The BizTalk Backup SQL Agent Job creates data- and transaction-log backups from the production environment, placing log marks into the transaction-log backups. An audit table is maintained which lists the backups that have been created, the type of backup (data or log backup) and details the location of the backup files.

- The BizTalk disaster recovery environment reads the audit table maintained by the production environment, using the information to determine which data- and transaction-log files need to be restored. These files are restored using the SQL ‘NORECOVERY’ option, leaving the databases in a non-operational state, allowing additional transaction-logs to be applied. Note: the transaction-logs are not restored to the transaction-log mark in this step, instead the full transaction-log is restored.

These two steps continue throughout live operations. In the event of a disaster recovery situation, where the production environment has been lost and the standby environment needs to be brought online, three recovery steps must be performed on the standby environment:

- The most recent transaction-log backup is restored to its transaction-log mark using the SQL ‘WITH RECOVERY’ option, placing the databases into an operationally ready state.

- Database and registry updates statements are run to re-configure the restored databases to use the standby environment.

- BizTalk services are brought online (including the Enterprise Single Sing-On server and Business Rules Engine service etc.) and the standby environment is made live.

Once the live environment is recovered and the switch from the standby environment back to the live environment is ready to be made, the process is reversed, with the standby environment creating backups that are restored to the live environment.

The Backup Process

We’re now ready to take a deeper look into how the backup and restore process works from a high-level, as shown in the diagram above. In Part II we will look at configuring the backup and restore process itself.

Before we continue however its worth taking a moment to look at a fundamental concept within the backup process: Backup-Sets.

Backup Sets

A backup-set is a group of database backups that operate as a logical whole. During the backup process, all of the databases within the backup-set must be successfully backed-up, or the backup-set will be considered incomplete; likewise, when restoring, all of the databases within the backup-set must be successfully restored, or the restoration will be considered incomplete (although there are steps within the restore jobs that handle incomplete restores automatically). This concept ensures that all of the BizTalk databases are backed-up and restored to the same point, ensuring consistency in a disaster-recovery situation.

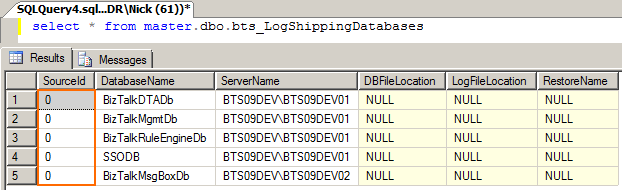

At a minimum, a backup-set must contain the BizTalk databases listed below, however it can also contain any custom application databases that need the same transactional consistency as the BizTalk databases:

- Management database – BizTalkMgmtDb

- MessageBox database – BizTalkMsgBoxDb

- Tracking database – BizTalkDTADb

- Rule-Engine database – BizTalkRuleEngineDb

- Enterprise Single Sign-On database – SSODb

The Backup BizTalk Server Job

Out of the box, a default BizTalk installation will create a SQL Agent job called Backup BizTalk Server. This job creates either full- or transactional-log backup-sets of the BizTalk databases ready for restoring in a disaster situation; it is also responsible for writing the transactional-log mark discussed earlier. The job will run by default every 15 minutes, however this can be configured to a time-interval that suits your environment.

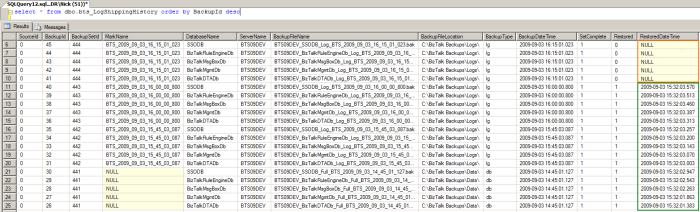

The job also maintains a backup history which is read by the standby environment to determine what backups are available for restoration; the history also provides auditing capabilities that allow the backup job to recover when only partial backup-sets are created.

The Backup BizTalk Server job performs three steps:

1. Backup Full

Performs a full backup of the BizTalk databases (BizTalkMgmtDb, BizTalkMsgBoxDb, BizTalkDTADb etc.) and writes to the backup history tables once a backup-set has been created.

A full backup is performed if the ForFullBackup flag has been set, or if the job executes in the scheduled window for a full-backup. The scheduled window is determined by the value passed to the Frequency parameter in the Job Step properties – values can either be ‘H’ (hourly) or ‘D’ (daily – 12 midnight), the job default is daily full-backups.

The .BAK files generated by this step are written to the location specified by the Location of Backup Files parameter in the Job Step properties. This should be a highly-available UNC Share that is accessible by the DR environment in a disaster situation.

2. Mark and Backup Log

Performs a transactional-log mark and subsequent backup of the BizTalk database transaction-logs, writing to the backup history tables once a backup-set has been created. Before the log mark is applied, new distributed transactions are blocked, while in-process distributed transactions are allowed to complete. Once the log-mark has been applied, the block on new distributed-transactions is released.

The transactional-log .BAK backup files generated by this step are written to the location specified by the Location of Backup Files parameter in the Job Step properties. Again, this needs to be a highly-available UNC Share that is accessible by the standby environment in a disaster situation.

3. Clear Backup History

The final step deletes the backup-set histories from the backup history tables based on the value specified by the Days to Keep parameter in the Job Step properties; the default is 14 days.

With the Backup job configured and running, we can focus our attention on the restoration of the data and transaction-log files in our standby environment.

The Restore Process

The BizTalk Restore jobs – otherwise know as ‘Log Shipping’ jobs on MSDN – operate on the standby environment and restore the data- and transaction-log files created in the steps detailed above. Transaction logs are continually recovered using the NORECOVERY option which leaves the databases in the ‘Restoring’ state, allowing additional transaction-logs to be applied. In the event of a disaster situation, where the standby environment needs to be brought online as the replacement for the live environment, the transaction-logs are restored to the transaction-log mark using the WITH RECOVERY option, placing the database into an operationally ready state. The various BizTalk services then need to be brought online.

Configuration of the Log Shipping Jobs

Before the log shipping jobs can be started, they must first be configured and prepared for first use – this is a one-off task when configuring log shipping. The log shipping jobs, supporting tables & stored procedures are created by executing two SQL scripts in the master database. The standby environment is finally configured, which creates the necessary connections between the two environments, along with the log shipping SQL Agent jobs themselves. This connection between the two systems allows the standby environment to obtain information about the backups that have been performed (Step 1 in the diagram above) – and therefore need to be restored (Step 2.) – and the location of the data- and transaction-log backup files themselves.

The BizTalk Log Shipping Jobs

Following configuration of the standby environment, three new SQL Agent jobs will be created to perform the daily recovery of data- and transactional-log backup files; in the event of the standby environment being made live, they also restore the databases to the last known transactional-log mark.

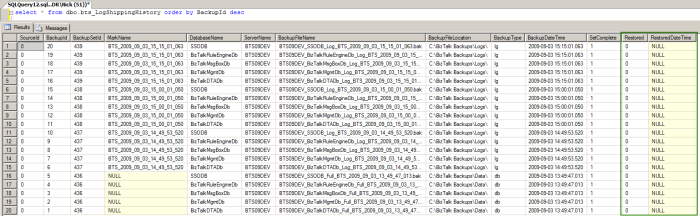

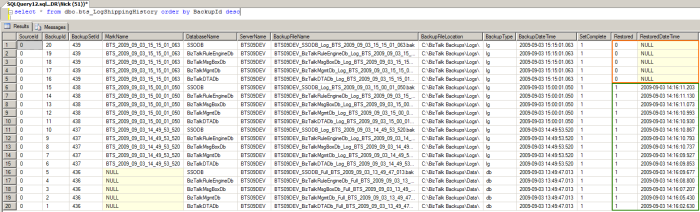

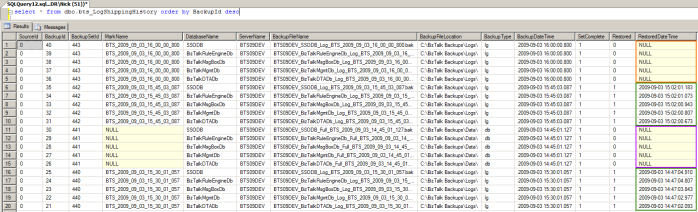

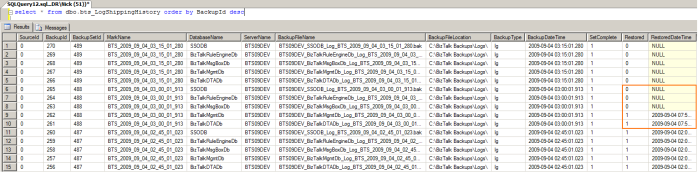

A restore history is maintained by the restore jobs (which is separate to the backup history); this restore history provides auditing capabilities that allow the jobs to recover when only partial backups are successfully restored.

The following three jobs are created to provide log shipping capabilities of the backup files:

1. The ‘BTS Log Shipping – Get Backup History’ Job

This job queries the live SQL Server history tables to determine which backups have been performed and identify the location of the respective data and transaction-log backup files, ready for restoration in the following ‘Restore Databases’ job. This job executes every minute.

2. The ‘BTS Log Shipping – Restore Databases’ Job

This job restores the backup data- and log-files to the standby SQL Server in a non-operational state, allowing further transaction-logs to be restored. The job executes every minute and will continue to execute while there are data- or log-files to restore (so if you see this job running continually, its possible that you have a backlog of files that are being restored).

The job identifies either the next transaction-log or full backup-set to to apply, and restores the necessary files with the ‘NORECOVERY’ option, leaving each database in the ‘Restoring…’ state; this allows additional transaction-logs to be applied. Data-files take priority over transaction-log files in the restore order, which ensures that daily full backups are restored before further transaction-log backups. Once a data- or transaction-log backup has been restored, the log shipping history table in the standby environment is updated to record the status of the restore operation.

Even though this job executes every minute, the restore will only take place for transaction-logs where there are two or more transaction-log backup-sets to restore; this is to ensure that we always have one transaction-log that can be restored to the transaction-log mark (see the ‘Restore to Mark’ job below). In practice, this means that the restore of the current transaction-log will not happen until the next transaction-log is received, a delay that is determined by the frequency of the Backup BizTalk Server job, which is by default scheduled to execute once every 15 minutes.

In the event of a disaster recovery situation, where the production environment has been lost and the standby environment needs to be brought online, we execute the third log shipping job, which is disabled by default:

3. The ‘BTS Log Shipping – Restore to Mark’ Job

This job restores all of the databases to an operational state, by restoring the next available transaction-log to their last log mark; finally, the job creates SQL Agent jobs. This job has no schedule and is intended to be run by the DBA when the standby SQL Server is to be brought online in a disaster recovery event.

The stored procedure called by this job restores the final transaction log using the ‘WITH RECOVERY’ option, placing the database into an operationally ready state. Furthermore, the restore operation ensures that the transaction-log is restored to the last log mark, ensuring that all databases are restored to exactly the same point.

Finally, the stored procedure drops and re-creates the SQL Agent jobs on the standby SQL Server that were present on the live SQL Server; this is to ensure that any changes that have been made to the default configuration of these jobs is migrated to the standby environment before it is brought online.

Note: This job is disabled by default and only executed when the standby environment is to be brought online.

Once the Restore to Mark job has been executed, two further scripts need to be executed so the restored BizTalk databases points to the standby SQL Server instance and not to the old live SQL Server instance:

- The first script updates the SQL Server instance name in the BizTalk Management, Rule Engine, HWS and BAM databases and SQL Agent jobs.

- The second script updates the SQL Server instance name in the registry for the BizTalk Management, Rule Engine, HWS and EDI databases.

Finally, the BizTalk Host Instances, Rule Engine Update, Enterprise Single Sign-On and WMI services need to be started. Once fully started, the standby environment is considered live and operational.

Wrap-Up

So that’s the end of Part 1. We’ve looked at how the BizTalk Backup and Restore process works at a high-level – how to backup, restore and bring our standby environment online in a disaster event.

In Part 2, we’ll walk through configuring the backup and restore jobs; in Part 3, we’ll demonstrate a long-running BizTalk application that is restored on a standby environment using the backup and restore process configured in Part 2.

BizTalk DR: Configuring BizTalk Backup for Disaster Recovery – Part 2

![Reblog this post [with Zemanta]](https://i0.wp.com/img.zemanta.com/reblog_e.png)